Project

- Work Program

- Objectives

- Use Cases

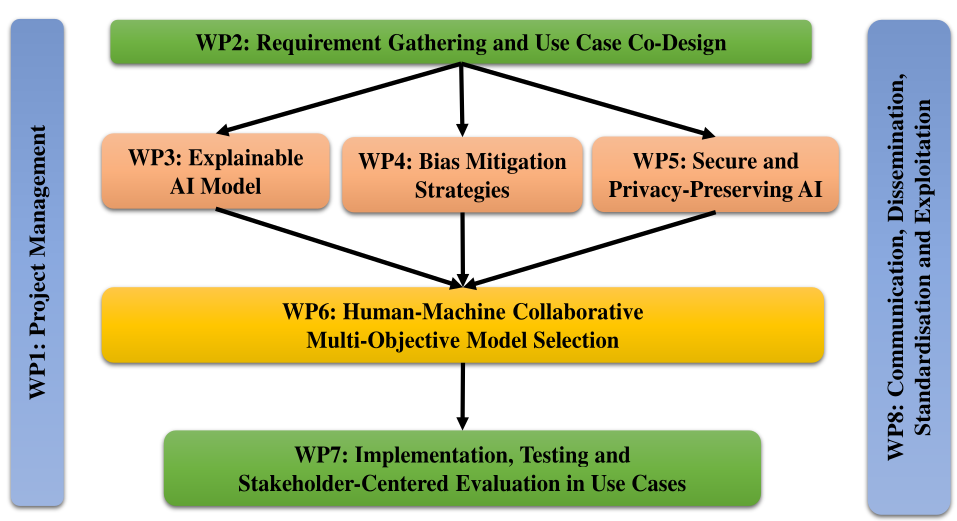

To tackle the technical challenges and achieve the R&I objectives, HarmonicAI proposes a diverse range of actions organised into eight work packages (WPs), as shown in the figure above. WP2–WP7 are R&I actions, while WP1 and WP8 are dedicated to project management, communication, dissemination, standardisation and exploitation.

The project runs from January 2024 to December 2027.

WP1: Project Management

Leader: UÉvora

Period: From M1 to M48

WP1 coordinates the project’s scientific and administrative activities, including project management, budget allocation and reporting. It is led by UÉvora, which has extensive experience in project management.

WP2: Requirement Gathering and Use Case Co-Design

Leader: DCU

Period: From M1 to M14

WP2 focuses on requirement gathering and use-case co-design. Its key task is to gather requirements and expectations from healthcare stakeholders for the design and evaluation of trustworthy AI solutions, and to establish high-level requirements for the project’s technical research activities. We will then define relevant and convincing use cases that demonstrate the capabilities of the developed solutions.

WP3: Explainable AI Models and Evaluation Metrics

Leader: BUL

Period: From M9 to M30

WP3 is geared towards developing explainable AI models and evaluation metrics. HarmonicAI will develop explainable AI models for the use cases and establish formal quantitative metrics for evaluating the quality of AI explainability in the healthcare domain. Lastly, the WP will propose a design framework for explainable AI in digital health.

WP4: Bias Mitigation Techniques and AI Fairness Metrics

Leader: JYU

Period: From M9 to M30

WP4 aims to develop bias mitigation techniques, AI fairness metrics, and operational guidelines. The WP will design bias mitigation techniques to improve AI fairness in the use cases and will explore operational guidelines for fair AI in healthcare organisations. Lastly, we will establish unified quantitative metrics for evaluating AI fairness.

WP5: Hybrid Learning Paradigm for Secure and Privacy-Preserving AI

Leader: UREAD

Period: From M9 to M30

WP5 will propose a three-tier privacy-preserving hybrid learning paradigm for prioritised predictions. It will integrate state-of-the-art privacy-enhancing technologies into the proposed hybrid learning paradigm. The WP will develop security mechanisms to defend against attacks on the cyber-physical system, AI models, and datasets. Lastly, the WP will establish a quantitative metric to measure global privacy loss.

WP6: Human-Machine Collaborative Multi-Objective Model Selection

Leader: TUDelft

Period: From M26 to M38

WP6 will integrate the proposed explainability, fairness, and privacy innovations into a unified AI pipeline. We will develop a human-guided multi-objective model selection algorithm. Further, we will develop a human-machine co-design framework for domain-specific, requirement-oriented trustworthy AI in digital health.

WP7: Implementation, Testing, and Stakeholder-Centered Evaluation in Use Cases

Leader: ULL

Period: From M37 to M48

WP7 will implement the proposed explainable, fair, and privacy-preserving AI solutions in the use cases. The WP will evaluate the proposed human-machine collaborative multi-objective design framework for trustworthy AI. Lastly, the WP will evaluate the proposed guidelines for AI practitioners and healthcare stakeholders.

WP8: Communication, Dissemination, Standardisation and Exploitation

Leader: UOL

Period: From M1 to M48

WP8 will coordinate communication, dissemination, standardisation activities and exploitation planning to maximise the project’s scientific, industrial and societal impact, ensuring sustainability of results beyond the project lifetime.

Research Objectives (RO)

RO1: Stakeholder Perception

Investigate healthcare stakeholders’ perceptions of the current use of AI in digital health and gather requirements for the design of trustworthy AI solutions.

RO2: Explainable AI

Build accurate, measurable, and explainable AI models to improve AI transparency for digital health.

RO3: Fairness through De-biasing

Establish a de-biasing strategy that comprises a portfolio of technical and operational actions for fair AI deployment in digital health.

RO4: Privacy-preservation

Develop a secure and privacy-preserving hybrid ML/DL learning paradigm for timely, prioritised, and accurate predictions.

RO5: Human-Machine Collaboration

Develop a human-machine collaborative multi-objective model selection framework for coherently explainable, fair, and privacy-preserving AI in digital health.

RO6: Trustworthy AI

Implement, test, and evaluate the developed trustworthy AI solutions in real-world digital health use cases.

Knowledge Transfer Objectives (KT)

KT1: People

Enhance the research and innovation potential and career prospects of participating researchers, including early-stage researchers (ESRs) and experienced researchers (ERs).

KT2: EU R&I Potential

Strengthen the EU’s competitiveness and leading position in the development of human-centric, sustainable, secure, inclusive, and trustworthy AI.

KT3: Link

Strengthen existing research links among leading researchers and institutions to enable knowledge exchange and professional training. Develop new and sustainable cross-disciplinary, cross-sector, and cross-border collaborations to build world-leading, overarching technologies and systems for future trustworthy AI in digital health.

KT4: Dissemination

Effectively disseminate and exploit the outcomes of the project through the project website, social media, publications, and outreach activities.

KT5: Communication

Engage the public in the research and innovation activities and communicate research outcomes to the public to promote AI and digital health technologies and applications.

The research outcomes will be implemented in three use cases as an effective way to transfer knowledge across academia, industry and the healthcare sector. The innovative explainable, fair and privacy-preserving AI models developed for these use cases will bridge the gap between theoretical AI research and real-world healthcare applications, and will also serve as an instrument for effective knowledge sharing.

UC 1: Trustworthy AI for physiotherapy rehabilitation in the UK

Leader: UOL

Participants: UOL, UNN, BUL, UREAD, MLA

UC 2: Trustworthy AI for hospital resources management in France

Leader: UJM

Participants: UJM, ULL, TUDelft, AMC, ULSBA

UC 3: Trustworthy AI for elderly mobility disorder prevention in Thailand

Leader: CMU

Participants: CMU, UÉvora, JYU, UOA, DECSIS, DCU